Update: follow the batlasids tag trail for follow-ups.

Back in February, I blogged about clean URLs and feed aggregation. In March, we learned about the ORE specification for mapping resource aggregations in Atom XML, just as we were gearing up to start work on the Concordia project, with support from the US National Endowment for the Humanities and the UK Joint Information Systems Committee.

Our first workshop was held in May. One of the major outcomes was a to-do for me: provide a set of stable identifiers for every citable geographic feature in the Barrington Atlas so collaborators could start publishing resource maps and building interoperation services right away, without waiting for the full build-out of Pleiades content (which will take some time).

The first fruits can be downloaded at http://atlantides.org/batlas/. All content under that URL is licensed cc-by. Back versions are in dated subdirectories.

There you'll find XML files for 3 of the Atlas maps (22, 38 and 65). There's only one feature class for which we don't provide IDs: roads. More on why not another time. I'll be adding files for more of the maps as quickly as I can, beginning with Egypt and the north African coast west from the Nile delta to Tripolitania (the Concordia "study area"). Our aim is full coverage for the Atlas within the next few months.

What do you get in the files?

IDs (aka aliases) for every citable geographic feature in the Barrington Atlas. For example:

- BAtlas 65 G2 Ouasada = ouasada-65-g2

If you combine one of these aliases with the "uribase" also listed in the file (http://atlantides.org/batlas/), you get a Uniform Resource Identifier for that feature (this should answer Sebastian Heath's question).
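As a minimal sketch of that combination in Python: the helper name `batlas_uri` is hypothetical (it does not come from the project code), and the only assumption is that the URI is the uribase followed directly by the alias.

```python
# The "uribase" value quoted in the post.
URIBASE = "http://atlantides.org/batlas/"


def batlas_uri(alias: str, uribase: str = URIBASE) -> str:
    """Join a uribase and an alias into a full URI (hypothetical helper)."""
    # Normalise the trailing slash so the result has exactly one separator.
    return uribase.rstrip("/") + "/" + alias


print(batlas_uri("ouasada-65-g2"))
# http://atlantides.org/batlas/ouasada-65-g2
```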

For features with multiple names, we provide multiple aliases for the convenience of our collaborators. For example, for BAtlas 65 A2 Aphrodisias/Ninoe, any of the following aliases is valid:

- aphrodisias-ninoe-65-a2

- aphrodisias-65-a2

- ninoe-65-a2

Features labeled in the Atlas with only a number are also handled. For example, BAtlas 38 C1 no. 9 is glossed in the Map-by-Map Directory with the location description (modern names): "Siret el-Giamel/Gasrin di Beida". So, we produce the following aliases, all valid:

- (9)-38-c1

- (9)-siret-el-giamel-gasrin-di-beida-38-c1

- (9)-siret-el-giamel-38-c1

- (9)-gasrin-di-beida-38-c1
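The pattern in the two lists above can be sketched roughly as follows. This is an illustration inferred from the examples only, not the project's actual algorithm; the function `feature_aliases` and its parameters are hypothetical, and it assumes the name parts have already been reduced to lowercase ASCII.

```python
from typing import List, Optional


def feature_aliases(names: List[str], map_no: int, grid: str,
                    number: Optional[int] = None) -> List[str]:
    """Build aliases for one feature (hypothetical helper, inferred from the examples)."""
    suffix = f"{map_no}-{grid.lower()}"
    # Numbered features carry the number in parentheses at the front.
    prefix = f"({number})-" if number is not None else ""
    aliases = []
    if number is not None:
        aliases.append(prefix + suffix)  # number-only form, e.g. (9)-38-c1
    if len(names) > 1:
        combined = "-".join(names)
        aliases.append(f"{prefix}{combined}-{suffix}")  # all names together
    for name in names:
        aliases.append(f"{prefix}{name}-{suffix}")  # one alias per name
    return aliases


print(feature_aliases(["aphrodisias", "ninoe"], 65, "A2"))
# ['aphrodisias-ninoe-65-a2', 'aphrodisias-65-a2', 'ninoe-65-a2']
```

Calling it with `number=9` and the two modern names for BAtlas 38 C1 yields the four `(9)-...` aliases listed above.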

Most unlabeled historical/cultural features also get identifiers. For example:

- Unnamed aqueduct at Laodicea ad Lycum in BAtlas 65 B2 = aqueduct-laodicea-ad-lycum-65-b2

- Unnamed bridge at Valeriana in BAtlas 22 B5 = bridge-valeriana-22-b5

Unlocated toponyms and false names (appearing only in the Map-by-Map Directory) get treated like this:

- BAtlas 22 unlocated Acrae = acrae-22-unlocated

- BAtlas 38 unlocated Ampelos/Ampelontes? = ampelos-ampelontes-38-unlocated = ampelos-38-unlocated = ampelontes-38-unlocated

- BAtlas 65 false name ‘Itoana’ = itoana-65-false
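Because every alias shown so far ends with a map number followed by a grid square, `unlocated` or `false`, an alias can be taken apart again by splitting from the right. The sketch below assumes that trailing pattern always holds (an inference from the examples, not a documented guarantee); `ParsedAlias` and `parse_alias` are hypothetical names.

```python
from typing import NamedTuple


class ParsedAlias(NamedTuple):
    label: str     # name or descriptive part, e.g. "ampelos-ampelontes"
    map_no: int    # Atlas map number, e.g. 38
    location: str  # grid square, "unlocated" or "false"


def parse_alias(alias: str) -> ParsedAlias:
    """Split an alias into label, map number and location (hypothetical helper)."""
    # Split on the last two hyphens only, since labels may contain hyphens too.
    parts = alias.rsplit("-", 2)
    if len(parts) != 3 or not parts[1].isdigit():
        raise ValueError(f"not a recognisable BAtlas alias: {alias!r}")
    label, map_no, location = parts
    return ParsedAlias(label, int(map_no), location)


print(parse_alias("ampelos-ampelontes-38-unlocated"))
# ParsedAlias(label='ampelos-ampelontes', map_no=38, location='unlocated')
```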

The XML files also provide associated lists of geographic names, formatted BAtlas citations and other information useful for searching, indexing and correlating these entries with your own existing datasets. What you don't get is coordinates. That's what the Pleiades legacy data conversion work is for, and it's a slower and more expensive process.

Read on to find out how you can start using these identifiers now, and get links to the corresponding Pleiades data automatically as it comes on line over time.

Why do we need these identifiers?

Separate digital projects would like to be able to refer unambiguously to any ancient Greek or Roman geographic feature using a consistent, machine-actionable scheme. The Barrington Atlas is a stable, published resource that can provide this basis if we construct the corresponding IDs.

Even without coordinates, other projects can begin to interoperate with each other immediately, as long as they have a common scheme of identifiers. After using BAtlas URIs to normalize, control or annotate their geographic description, they can publish services or crosswalks that provide links for the relationships within and between their datasets. For example, for each record in a database of coins you might like links to all the other coins minted by the same city, or to digital versions (in other databases) of papyrus documents and inscriptions found at that site.

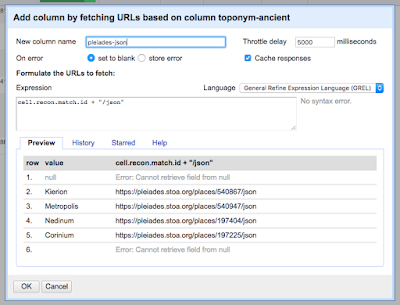
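To make the crosswalk idea concrete, here is a small sketch that groups records from different collections by the BAtlas URI they cite. The record identifiers (`coin-1`, `papyrus-7` and so on) are placeholders invented for illustration, and `build_crosswalk` is a hypothetical function, not part of any project's code.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def build_crosswalk(records: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group record identifiers by the BAtlas URI each one cites (hypothetical)."""
    index: Dict[str, List[str]] = defaultdict(list)
    for record_id, uri in records:
        index[uri].append(record_id)
    return dict(index)


# Placeholder records: (record identifier, BAtlas URI used to annotate it).
records = [
    ("coin-1", "http://atlantides.org/batlas/aphrodisias-65-a2"),
    ("papyrus-7", "http://atlantides.org/batlas/ouasada-65-g2"),
    ("coin-2", "http://atlantides.org/batlas/aphrodisias-65-a2"),
]

crosswalk = build_crosswalk(records)
print(crosswalk["http://atlantides.org/batlas/aphrodisias-65-a2"])
# ['coin-1', 'coin-2']
```

Note that this simple grouping treats each alias as a separate key; a real service would presumably need to map the several valid aliases for one feature onto a single entry first.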

Moreover, we would like other projects to start using a consistent identifier scheme now, so that as Pleiades adds content we can build more interoperation around it (e.g., dynamic mapping, coordinate lookup, proximity search across multiple collections). To that end, Pleiades will provide redirects (303 See Other) from Barrington Atlas URIs (following the scheme described here) as follows:

- If a corresponding entry exists in Pleiades, the web browser will be redirected to that Pleiades page automatically

- If there is not yet a corresponding entry in Pleiades, the web browser will be redirected to an HTML page providing a full human-readable citation of the Atlas, as well as information about this service

So, for example:

- http://atlantides.org/batlas/aphrodisias-ninoe-65-a2 will redirect to http://pleiades.stoa.org/places/638753

- http://atlantides.org/batlas/vlahii-22-e4 will redirect to http://atlantides.org/batlas/vlahii-22-e4.html until there is a corresponding Pleiades record
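The redirect rule can be sketched as a small decision function. This is only an illustration of the behaviour described above, not the server's actual implementation; `redirect_for` and the `pleiades_uris` lookup table are hypothetical, and the single table entry is taken from the example in this post.

```python
from typing import Dict, Tuple

URIBASE = "http://atlantides.org/batlas/"


def redirect_for(alias: str, pleiades_uris: Dict[str, str]) -> Tuple[int, str]:
    """Return the HTTP status and Location for a BAtlas alias (hypothetical)."""
    target = pleiades_uris.get(alias)
    if target is None:
        # No Pleiades entry yet: fall back to the HTML citation page.
        target = f"{URIBASE}{alias}.html"
    return 303, target  # 303 See Other in both cases


# Lookup table of aliases that already have a Pleiades page.
known = {"aphrodisias-ninoe-65-a2": "http://pleiades.stoa.org/places/638753"}

print(redirect_for("aphrodisias-ninoe-65-a2", known))
# (303, 'http://pleiades.stoa.org/places/638753')
print(redirect_for("vlahii-22-e4", known))
# (303, 'http://atlantides.org/batlas/vlahii-22-e4.html')
```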

The HTML landing pages for non-Pleiades redirects are not in place yet, but we're working on them. We'll post again when they are live.

Why URIs for a discretely citable feature in a real-world, printed atlas?

I'll let Bizer, Cyganiak and Heath explain the naming of resources with URI references. In the parlance of "Linked Data on the Web," Barrington Atlas features are "non-information resources"; that is, they are non-digital/real-world discrete entities about which web authors and services may want to make assertions or around which to perform operations. What we are doing is creating a stable system for identifying and citing these resources so that those assertions and operations can be automated using standards-compliant web mechanisms and applications. The HTML pages to which web browsers will be automatically redirected constitute "information resources" that describe the "non-information resources" identified by the original URIs.

How

If I get a comment box full of requests for a blow-by-blow description of the algorithm, I'll post something on that. If you're really curious and energetic, have a look at the code. It's intended mostly for short-term, internal use, so it's not marvelously documented. Yes, it's a hack.

One of the big headaches was deciding how to decompose the complex labels into simple, clean ASCII strings that can be legal URL components. Sean blogged about that, and wrote some code to do it, shortly after the workshop.
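For readers who want a feel for the problem, here is one common way to reduce a label to a lowercase ASCII string using only the Python standard library. It is not Sean's code and almost certainly handles fewer edge cases; `ascii_slug` is a hypothetical name, and the approach (Unicode decomposition, dropping non-ASCII characters, collapsing everything else to hyphens) is an assumption about what "clean" means here.

```python
import re
import unicodedata


def ascii_slug(label: str) -> str:
    """Reduce a label to lowercase ASCII words joined by hyphens (hypothetical)."""
    # Decompose accented characters so the base letter survives ASCII encoding.
    decomposed = unicodedata.normalize("NFKD", label)
    ascii_only = decomposed.encode("ascii", "ignore").decode("ascii")
    # Collapse every run of non-alphanumeric characters into a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_only.lower()).strip("-")


print(ascii_slug("Laodicea ad Lycum"))   # laodicea-ad-lycum
print(ascii_slug("Aphrodisias/Ninoe"))   # aphrodisias-ninoe
print(ascii_slug("Siret el-Giamel"))     # siret-el-giamel
```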

Credit where credit is due

Sean and I had a lot of help from the workshop participants (Ben Armintor, Gabriel Bodard, Hugh Cayless, Sebastian Heath, Tim Libert, Sebastian Rahtz and Charlotte Roueché) in sorting out what to do here. Older, substantive conversations that informed this process (with these folks and others; notably Rob Chavez, Greg Crane, Ruth Mostern, Dan Pett, Ross Scaife†, Patrick Sims-Williams, Linda Smith and Neel Smith) go back as far as 2000, shortly after the Atlas was published.

Many thanks to all!

Examples in the Wild

Sebastian Rahtz has already mocked up an example service for the Lexicon of Greek Personal Names. It takes a BAtlas alias and returns all the name records in their system that are associated with the corresponding place. So, for example:

- http://clas-lgpn2.class.ox.ac.uk/batlas/aloros-50-b3

This is just one of several services that LGPN is developing. See the LGPN web services page, as well as the LGPN presentation to the Digital Classicist Seminar in London last month.

Sebastian Heath, for some time, has been incorporating Pleiades identifiers into the database records of the American Numismatic Society. He has blogged about that work in the context of Concordia.

Do you have an application? Let me know!